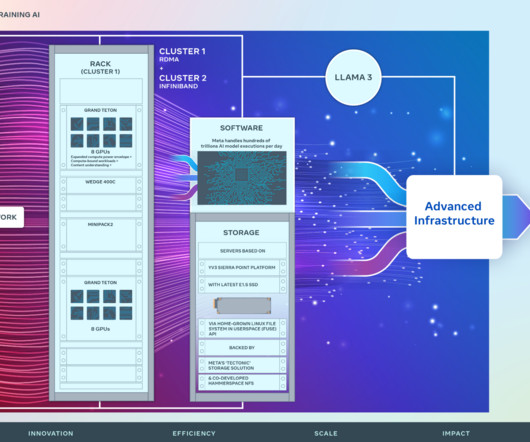

How Meta trains large language models at scale

Engineering at Meta

JUNE 12, 2024

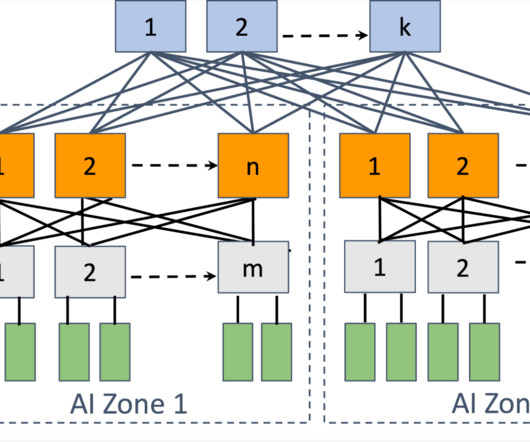

Supporting GenAI at scale has meant rethinking how our software, hardware, and network infrastructure come together. Doing so requires a robust, high-speed network infrastructure as well as efficient data transfer protocols and algorithms. It also requires revisiting trade-offs made for other types of workloads.
