Maintaining large-scale AI capacity at Meta

Engineering at Meta

JUNE 12, 2024

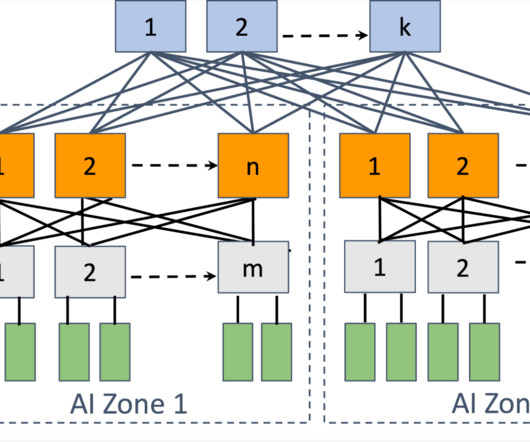

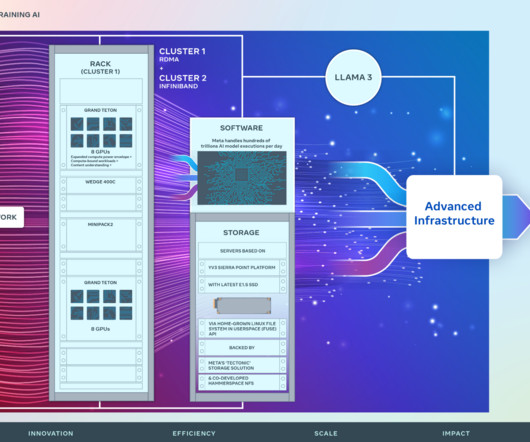

Meta runs different types of backend networks, topologies, and training jobs that have tight dependencies between software and hardware components. Rather than upgrading everything at once, we ensure components are compatible with each other and roll out component upgrades in a sliding fashion. This transition has not been without its challenges.
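The sliding rollout described above can be illustrated with a minimal, hypothetical Python sketch. It assumes a fleet modeled as a mapping from host name to software version, a fixed window of hosts upgraded per step, and a hand-written set of version pairs that are allowed to run side by side. None of these names (`sliding_rollout`, `is_compatible`, `window`, `compat`) or structures come from Meta's actual tooling; they only show the general idea of checking compatibility before moving upgrades through the fleet a slice at a time.

```python
from typing import Dict, Iterator, List, Set, Tuple

# Hypothetical compatibility set: version pairs that may coexist in the fleet.
Compat = Set[Tuple[str, str]]


def is_compatible(a: str, b: str, compat: Compat) -> bool:
    """Two versions can coexist if they are equal or listed as compatible (either order)."""
    return a == b or (a, b) in compat or (b, a) in compat


def sliding_rollout(
    fleet: Dict[str, str], target: str, window: int, compat: Compat
) -> Iterator[List[str]]:
    """Upgrade `fleet` (host -> version) to `target`, at most `window` hosts per step.

    Illustrative only: refuses to start if the target version cannot coexist
    with every version currently running, so the fleet never enters an
    incompatible mixed-version state partway through the rollout.
    """
    if window < 1:
        raise ValueError("window must be at least 1")

    # Every version still in the fleet will run alongside `target` at some point.
    for version in set(fleet.values()):
        if not is_compatible(version, target, compat):
            raise RuntimeError(f"{target} cannot coexist with {version}")

    # Hosts already on the target version are skipped.
    pending = [host for host, version in sorted(fleet.items()) if version != target]
    while pending:
        batch, pending = pending[:window], pending[window:]
        for host in batch:
            # A real system would drain, upgrade, and health-check the host here.
            fleet[host] = target
        yield batch
```

For example, with three hosts on `v1` and a window of two, `list(sliding_rollout(fleet, "v2", 2, {("v1", "v2")}))` yields `[["h1", "h2"], ["h3"]]`, so only part of the fleet is in flux at any step. Checking compatibility up front is the design choice that makes the incremental approach safe: old and new versions must be able to run together for the whole duration of the rollout.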
