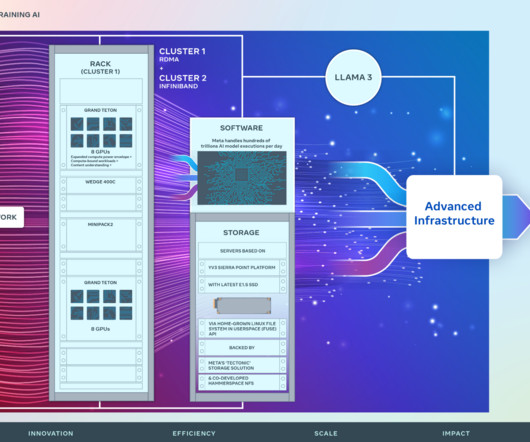

How Meta trains large language models at scale

Engineering at Meta

JUNE 12, 2024

Optimal connectivity between GPUs: Large-scale model training involves transferring vast amounts of data between GPUs in a synchronized fashion. There are several reasons for this failure, but this failure mode is seen more often early in a server's life and settles as the server ages. Both of these options had tradeoffs.
