How Meta trains large language models at scale

Engineering at Meta

JUNE 12, 2024

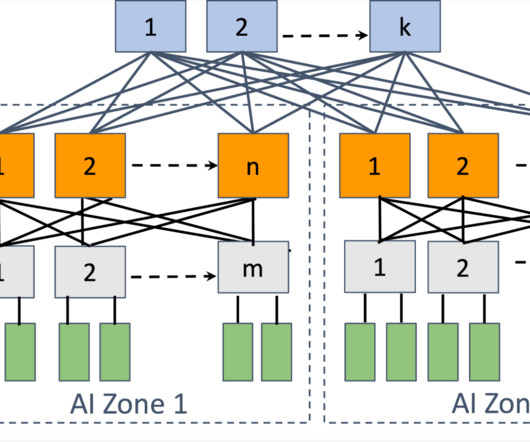

Optimal connectivity between GPUs: Large-scale model training involves transferring vast amounts of data between GPUs in a synchronized fashion, so a slow exchange between even a small subset of GPUs can hold up the whole job. Solving this problem requires both a robust, high-speed network infrastructure and efficient data transfer protocols and algorithms, and each of the available design choices involves tradeoffs.
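The paragraph above mentions efficient data transfer algorithms without naming the ones Meta uses. As an illustration only, here is a minimal sketch of ring all-reduce, a widely used collective for summing gradients across GPUs. It runs on plain Python lists that stand in for GPU buffers. The function names `ring_all_reduce` and `ring_all_reduce_time` are hypothetical. The timing function is a simplified, bandwidth-only model that assumes away latency and compute/communication overlap. The sketch shows why synchronization matters: all 2(n-1) ring steps run in lockstep, so the slowest link gates the entire collective.

```python
"""Hypothetical, simplified sketch of ring all-reduce; not Meta's implementation."""
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Sum equal-length buffers across ranks using the ring algorithm.

    Each rank's buffer is split into n chunks. During n-1 reduce-scatter steps,
    every rank sends one chunk to its right neighbour, which adds it to its own
    copy. Afterwards each rank owns one fully reduced chunk. During n-1
    all-gather steps, those reduced chunks travel around the ring until every
    rank holds the complete sum.
    """
    n = len(buffers)
    length = len(buffers[0])
    assert all(len(b) == length for b in buffers), "buffers must match in size"
    data = [list(b) for b in buffers]  # copy so the inputs are not mutated
    # Chunk c covers indices bounds[c]:bounds[c + 1] (chunks may be empty).
    bounds = [c * length // n for c in range(n + 1)]

    def chunk(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    # Reduce-scatter: at step s, rank r sends chunk (r - s) % n to rank r + 1,
    # which accumulates it into its own copy of that chunk.
    for s in range(n - 1):
        # Snapshot every send first so that all ranks exchange "at once",
        # mimicking a lockstep (synchronized) step.
        sends = [(r, (r - s) % n) for r in range(n)]
        payloads = [[data[r][i] for i in chunk(c)] for r, c in sends]
        for (r, c), payload in zip(sends, payloads):
            dst = (r + 1) % n
            for i, v in zip(chunk(c), payload):
                data[dst][i] += v

    # Rank r now holds the full sum of chunk (r + 1) % n.
    # All-gather: at step s, rank r forwards chunk (r + 1 - s) % n to rank r + 1,
    # which overwrites its copy with the reduced values.
    for s in range(n - 1):
        sends = [(r, (r + 1 - s) % n) for r in range(n)]
        payloads = [[data[r][i] for i in chunk(c)] for r, c in sends]
        for (r, c), payload in zip(sends, payloads):
            dst = (r + 1) % n
            for i, v in zip(chunk(c), payload):
                data[dst][i] = v
    return data


def ring_all_reduce_time(total_bytes: float, link_gbps: List[float]) -> float:
    """Bandwidth-only estimate (seconds) of a ring all-reduce over n links.

    Each of the 2(n-1) lockstep steps moves one chunk of total_bytes / n per
    link, and no rank can start the next step until the slowest link finishes.
    Latency and overlap are deliberately ignored (a simplifying assumption).
    """
    n = len(link_gbps)
    chunk_bytes = total_bytes / n
    slowest_bytes_per_s = min(link_gbps) * 1e9 / 8  # Gbit/s -> bytes/s
    return 2 * (n - 1) * chunk_bytes / slowest_bytes_per_s


if __name__ == "__main__":
    result = ring_all_reduce([[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]])
    print(result)
    fast = ring_all_reduce_time(1e9, [400.0] * 8)
    slow = ring_all_reduce_time(1e9, [400.0] * 7 + [100.0])
    print(f"all links 400 Gbps: {fast:.3f} s; one link at 100 Gbps: {slow:.3f} s")
```

Under this model, consider eight ranks exchanging 1 GB over 400 Gbps links. If a single link drops to 100 Gbps, the modeled all-reduce becomes four times slower, even though the other seven links are unchanged. This is the compounding effect that makes network design critical for synchronized training.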
