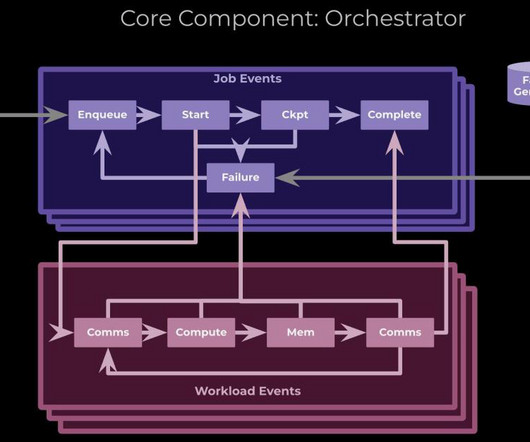

A RoCE network for distributed AI training at scale

Engineering at Meta

AUGUST 5, 2024

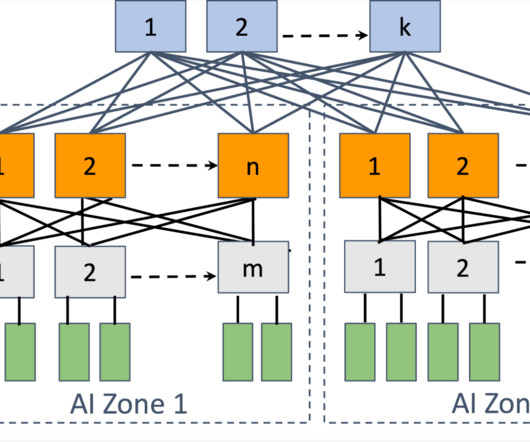

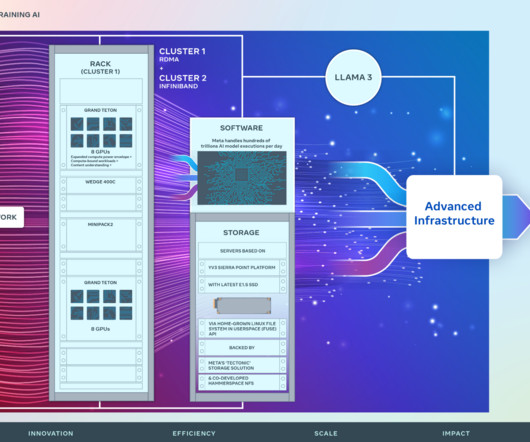

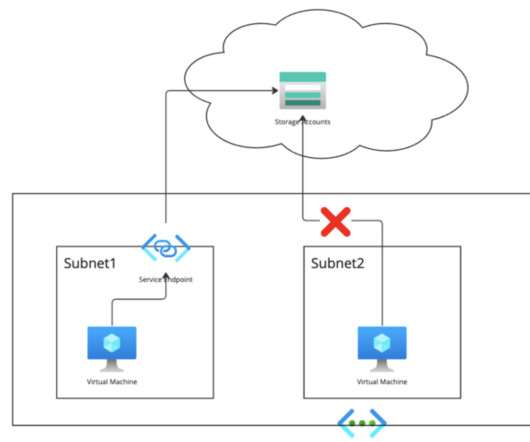

AI networks play an important role in interconnecting tens of thousands of GPUs, forming the foundational infrastructure for training large models with hundreds of billions of parameters, such as LLAMA 3.1. Distributed training, in particular, imposes the most significant strain on data center networking infrastructure.
