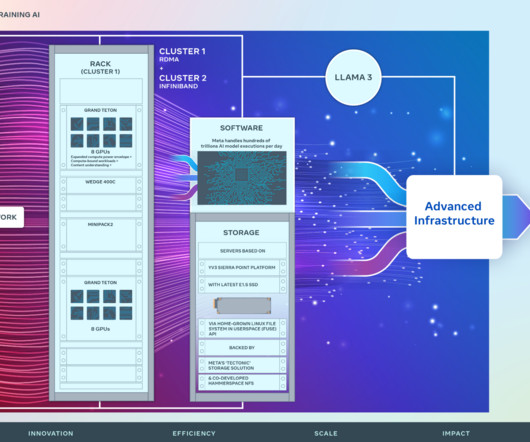

A RoCE network for distributed AI training at scale

Engineering at Meta

AUGUST 5, 2024

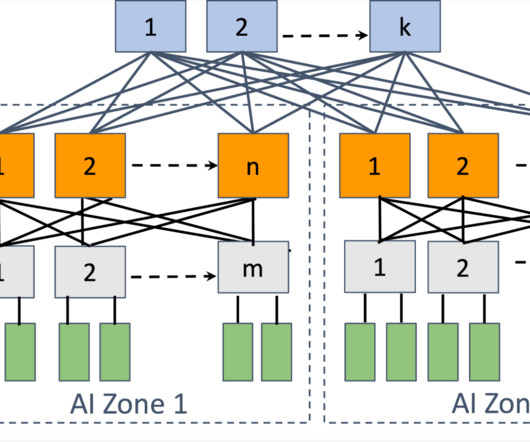

We ensure that the rack switch has enough ingress bandwidth that it does not hinder the training workload. The backend (BE) network is a specialized fabric that connects all RDMA NICs in a non-blocking architecture, providing high-bandwidth, low-latency, lossless transport between any two GPUs in the cluster, regardless of their physical location.
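To make "non-blocking" concrete, one common rule of thumb is that a switch tier is non-blocking when its total uplink bandwidth is at least as large as its total host-facing bandwidth, meaning an oversubscription ratio of 1:1 or lower. The sketch below illustrates that arithmetic only. The function names, port counts, and link speeds are hypothetical and are not taken from the article.

```python
def oversubscription_ratio(downlink_gbps, uplink_gbps):
    """Return total host-facing bandwidth divided by total uplink bandwidth."""
    total_down = sum(downlink_gbps)
    total_up = sum(uplink_gbps)
    if total_up <= 0:
        raise ValueError("switch needs at least one uplink with positive bandwidth")
    return total_down / total_up


def is_non_blocking(downlink_gbps, uplink_gbps):
    """A tier is treated as non-blocking when the ratio is 1.0 or lower."""
    return oversubscription_ratio(downlink_gbps, uplink_gbps) <= 1.0


# Hypothetical rack switch (assumed values): 16 NIC-facing ports
# and 16 uplinks, all running at 400 Gbps.
nic_ports = [400] * 16
uplinks = [400] * 16

print(oversubscription_ratio(nic_ports, uplinks))  # 1.0
print(is_non_blocking(nic_ports, uplinks))         # True
```

Halving the number of uplinks in this example would give a ratio of 2.0, so the tier would no longer count as non-blocking under this definition.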
